Global Wireless Solutions (GWS) evaluates wireless networks according to the company’s OneScore methodology. At the moment, AT&T cites GWS’s results in commercials where AT&T claims to offer the best network.

In an article about performance tests of wireless networks, GWS’s founder, Dr. Paul Carter, writes:[1]

Unfortunately, GWS itself is not especially transparent about its methodology. The public-facing information about the company’s methodology is sparse, and I did not receive a response to my email requesting additional information.

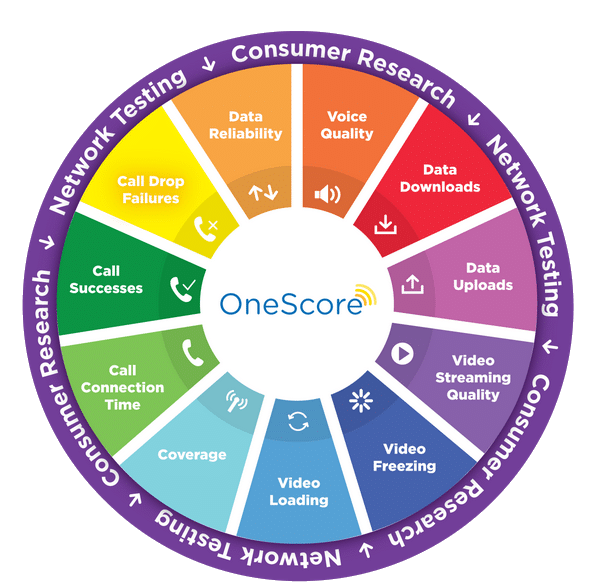

As I understand it, GWS’s methodology has two components:

- Technical performance testing in about 500 markets

- Consumer surveying that helps determine how much weight to give different metrics

Technical testing

In 2019, GWS conducted extensive drive testing: GWS employees drove close to 1,000,000 miles while phones in their vehicles ran automated tests of network performance.[2]

The drive testing took place in about 500 markets, including all of the largest metropolitan areas. GWS says these markets account for about 94% of the U.S. population.[3] I expect that GWS’s focus on these markets limits the weight placed on rural and remote areas. Accordingly, GWS’s results may be biased against Verizon, which tends to have better coverage than other networks in sparsely populated areas.
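To make the concern concrete, here is a toy calculation. Every number in it is made up for illustration and is not GWS data: two hypothetical networks, a "tested" region holding 94% of the population, and an untested rural remainder. It compares each network's population-weighted reliability when only tested markets count versus when all areas count.

```python
# Toy illustration with HYPOTHETICAL numbers (not GWS data): how restricting
# testing to markets that cover ~94% of the population can change which
# network looks best.

# Hypothetical population shares: tested markets vs. the untested remainder.
POPULATION_SHARE = {"tested_markets": 0.94, "untested_rural": 0.06}

# Hypothetical reliability (fraction of successful tests) per region.
RELIABILITY = {
    "Network A": {"tested_markets": 0.95, "untested_rural": 0.70},
    "Network B": {"tested_markets": 0.94, "untested_rural": 0.90},
}


def weighted_average(scores: dict[str, float], regions: list[str]) -> float:
    """Population-weighted average of a network's scores over the given regions."""
    total_weight = sum(POPULATION_SHARE[r] for r in regions)
    return sum(scores[r] * POPULATION_SHARE[r] for r in regions) / total_weight


if __name__ == "__main__":
    for network, scores in RELIABILITY.items():
        tested_only = weighted_average(scores, ["tested_markets"])
        all_areas = weighted_average(scores, list(POPULATION_SHARE))
        print(f"{network}: tested-only {tested_only:.4f}, all areas {all_areas:.4f}")
```

With these invented figures, Network A leads when only tested markets count (0.9500 vs. 0.9400), while Network B leads once the rural remainder is included (0.9376 vs. 0.9350). Weighting by land area rather than population would shift even more weight toward rural performance.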

Consumer surveying

In 2019, GWS surveyed about 5,000 consumers to figure out how much they value different aspects of wireless performance.[4] GWS finds that consumers place a lot of importance on the voice quality of phone calls, even though people are using their phones for more and more activities unrelated to calling.[5] GWS also finds that, as I’ve suggested, consumers care a lot more about the reliability of their wireless service than about its raw speed.[6]
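GWS hasn't said how survey answers turn into weights, so the following is only one plausible approach, written as an assumption: each respondent rates each aspect's importance on a 1–5 scale, and an aspect's weight is its mean rating divided by the sum of all mean ratings. The aspect names, the rating scale, and the function `survey_weights` are hypothetical.

```python
# HYPOTHETICAL sketch: GWS has not published how (or whether) survey answers
# become weights. Here, each respondent rates each aspect's importance on a
# 1-5 scale; an aspect's weight is its mean rating divided by the sum of all
# mean ratings, so the weights add up to 1.
from statistics import mean


def survey_weights(responses: list[dict[str, int]]) -> dict[str, float]:
    """Turn per-respondent importance ratings into weights that sum to 1."""
    aspects = responses[0].keys()
    mean_ratings = {a: mean(r[a] for r in responses) for a in aspects}
    total = sum(mean_ratings.values())
    return {a: m / total for a, m in mean_ratings.items()}


if __name__ == "__main__":
    # Three made-up respondents (the real survey had about 5,000).
    responses = [
        {"voice_quality": 5, "reliability": 5, "download_speed": 3},
        {"voice_quality": 4, "reliability": 5, "download_speed": 2},
        {"voice_quality": 5, "reliability": 4, "download_speed": 4},
    ]
    for aspect, weight in survey_weights(responses).items():
        print(f"{aspect}: {weight:.3f}")
```

In this made-up sample, voice quality and reliability each get a weight of 14/37 (about 0.378) and download speed gets 9/37 (about 0.243). A real methodology might instead use ranking questions, trade-off (conjoint) designs, or regression on satisfaction scores; each would produce different weights from the same consumers.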

Combining components

As I understand it, GWS uses the results of its surveying to decide how much weight to place on different parts of the technical performance tests:

The methodology’s name, OneScore, and the graphic below suggest that the company combines all of its data to arrive at final, numerical scores for each network:[7]

Oddly enough, I can’t find anything GWS has published that looks like final scores. That may be a good thing. I’ve previously gone into great detail about why scoring systems that use weighted rubrics to give companies or products a single, overall score tend to work poorly.
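For readers unfamiliar with weighted rubrics, here is a minimal sketch of how one might produce a single score. It is not GWS’s OneScore formula, which isn’t public. The networks, metrics, raw values, weights, and the choice of min-max scaling are all hypothetical. It shows that two reasonable-looking weight sets can put different networks in first place.

```python
# HYPOTHETICAL sketch of a weighted-rubric "single score"; this is NOT
# GWS's OneScore formula, which has not been published.

RAW = {  # made-up test results per network (higher is better for both)
    "Network A": {"download_mbps": 60.0, "reliability": 0.95},
    "Network B": {"download_mbps": 40.0, "reliability": 0.97},
    "Network C": {"download_mbps": 50.0, "reliability": 0.96},
}


def normalize(raw: dict[str, dict[str, float]]) -> dict[str, dict[str, float]]:
    """Min-max scale each metric to [0, 1] across networks."""
    metrics = next(iter(raw.values())).keys()
    scaled: dict[str, dict[str, float]] = {n: {} for n in raw}
    for m in metrics:
        lo = min(v[m] for v in raw.values())
        hi = max(v[m] for v in raw.values())
        for n, v in raw.items():
            # If every network ties on a metric, give them all full credit.
            scaled[n][m] = (v[m] - lo) / (hi - lo) if hi > lo else 1.0
    return scaled


def overall_scores(raw: dict[str, dict[str, float]],
                   weights: dict[str, float]) -> dict[str, float]:
    """Weighted sum of the normalized metrics for each network."""
    scaled = normalize(raw)
    return {n: sum(weights[m] * s[m] for m in weights) for n, s in scaled.items()}


if __name__ == "__main__":
    for weights in ({"download_mbps": 0.6, "reliability": 0.4},
                    {"download_mbps": 0.4, "reliability": 0.6}):
        scores = overall_scores(RAW, weights)
        ranking = sorted(scores, key=scores.get, reverse=True)
        print(weights, ranking)
```

With the first weight set, Network A comes out on top; with the second, Network B does. Min-max scaling is only one of many options, and it also stretches a two-point gap in reliability (0.95 vs. 0.97) across the full 0-to-1 range, so the final ranking depends heavily on modeling choices that a single headline number hides.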

2019 Results

In GWS’s 2019 report, the company lists which networks had the best performance in several different areas:

AT&T:

- Download speed

- Data reliability

- Network capacity

- Video streaming experience

- Voice accessibility

- Voice retainability

T-Mobile:

- Voice quality

Verizon:

- Upload speed

Open questions

I have a bunch of open questions about GWS’s methodology. If you represent GWS and can shed light on any of these topics, please reach out.

- Does the focus on 501 markets (about 94% of the U.S. population) tend to leave out rural areas where Verizon has a strong network relative to other operators?

- Do operators pay GWS? Does AT&T pay to advertise GWS’s results?

- What does the consumer survey entail?

- How directly are the results of the consumer survey used to determine weights used later in GWS’s analysis?

- What does GWS make of the discrepancies between its results and those of RootMetrics?

- How close were different networks’ scores in each category?

- GWS names the best-performing network in several categories. Is information available about the second-, third-, and fourth-place networks in each category?

- Does GWS coerce its raw data into a single overall score for each network?

- Are those results publicly available?

- How are the raw performance data coerced into scores that can be aggregated?