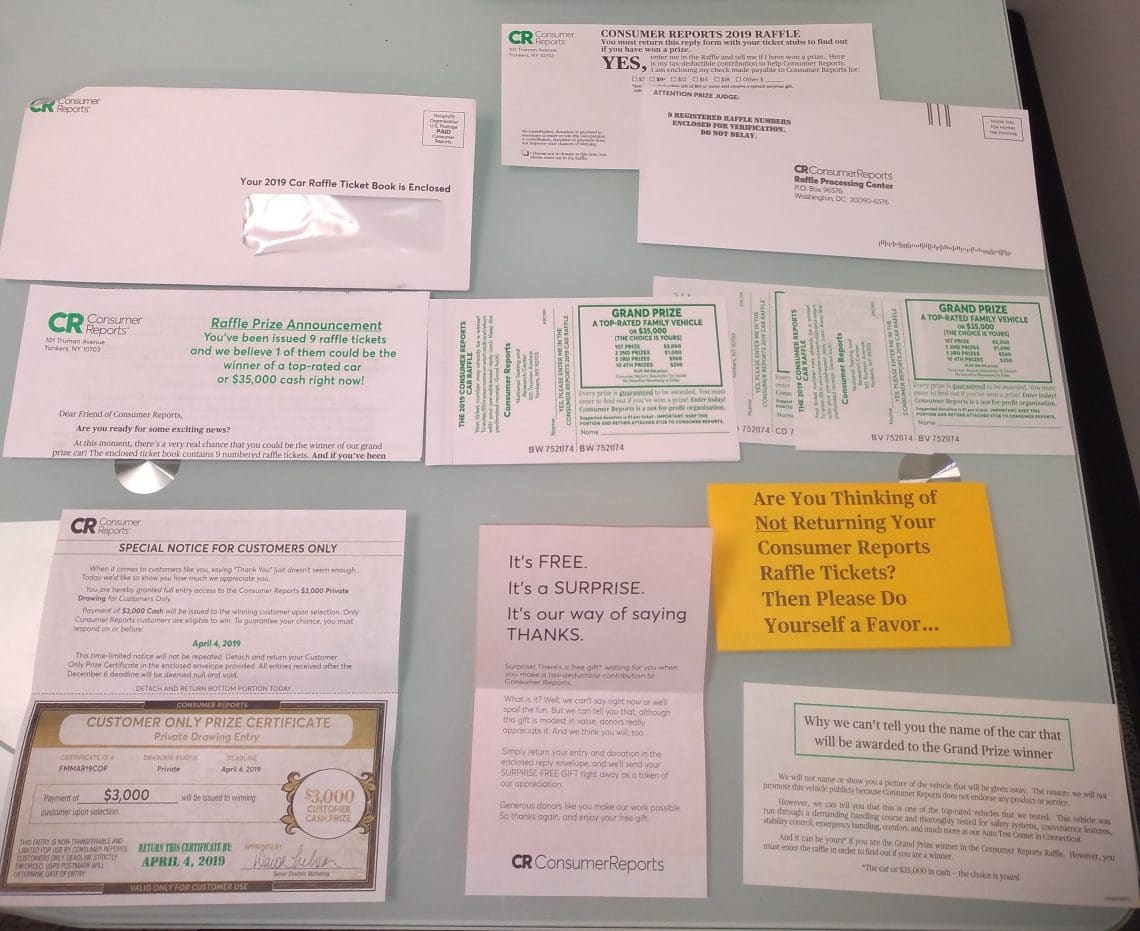

The other day, I received a mailing from Consumer Reports. It was soliciting contributions for a raffle fundraiser. The mailing had nine raffle tickets in it. Consumer Reports was requesting that I send back the tickets with a suggested donation of $9 (one dollar for each ticket). The mailing had a lot of paper:

The grand prize was the winner’s choice of an undisclosed top-rated car or $35,000. A number of smaller prizes brought the total amount up for grabs to about $50,000.

The materials included a lot of gimmicky text:

- “If you’ve been issued the top winning raffle number, then 1 of those tickets is definitely the winner of a top-rated car — or $35,000 in cash.”

- “Why risk throwing away what could be a huge pay day?”

- “There’s a very real chance you could be the winner of our grand prize car!”

Consumer Reports also indicates that they’ll send a free, surprise gift to anyone who donates $10 or more. It feels funny to donate money hoping that I might win more than I donate, but I get it. Fundraising gimmicks work. That said, I get frustrated when fundraising gimmicks are dishonest.

One of the papers in the mailing came folded with print on each side. Here’s the front:

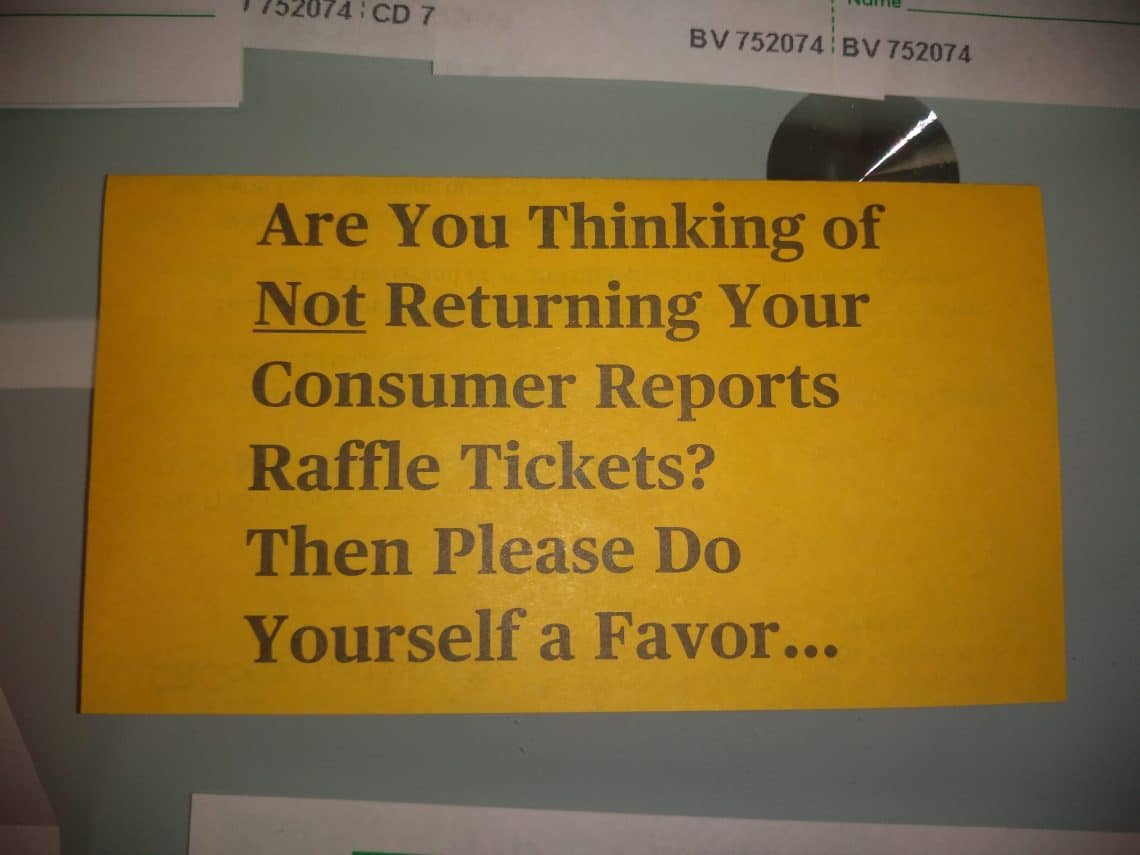

On the other side, I found a letter from someone involved in Consumer Reports’ marketing. The letter argues that it would be silly for me not to find out if I received winning tickets:

The multiple tickets bit is silly. It’s like the Yogi Berra line at the opening of the post; cutting a pizza into more slices doesn’t create more food. It doesn’t matter how many tickets I have unless I get more tickets than the typical person.

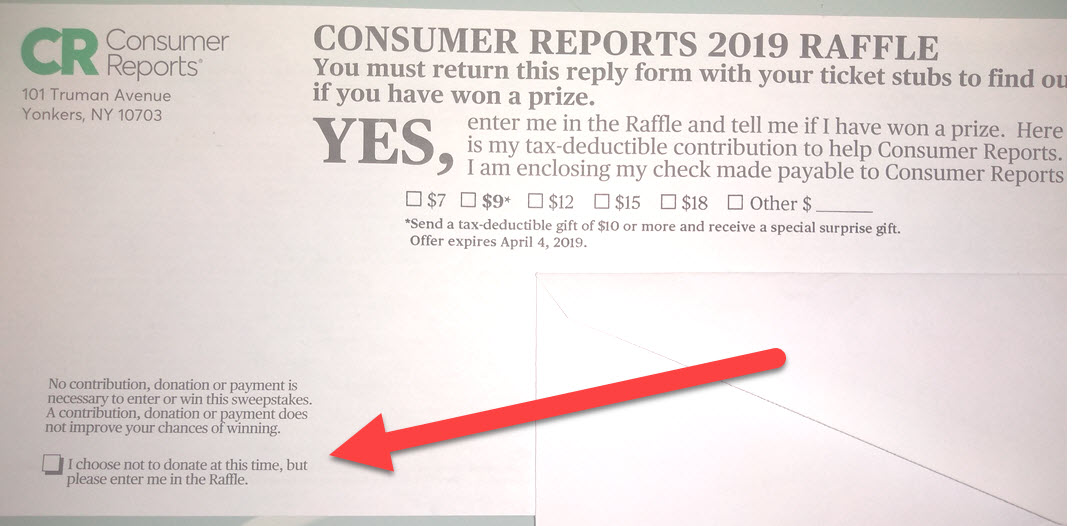

Come on. Consumer Reports doesn’t care if a non-donor decides not to turn in tickets. What’s the most plausible explanation for why Consumer Reports includes the orange letter? People who would otherwise ignore the mailing sometimes end up feeling guilty enough to make a donation. Checking the “I choose not to donate at this time, but please enter me in the Raffle” box on the envelope doesn’t feel great.

Writing my name on each ticket, reading the materials, and mailing the tickets take time. My odds of winning are low. Stamps cost money.

Let’s give Consumer Reports the benefit of the doubt and pretend that the only reason not to participate is that stamps cost money. The appropriate stamp costs 55 cents at the moment.[^1] Is the expected reward for sending in the tickets greater than 55 cents?

Consumer Reports has about 6 million subscribers.[^2] Let’s continue to give Consumer Reports the benefit of the doubt and assume it can print everything, send mailings, handle the logistics of the raffle, and send gifts back to donors for only $0.50 per subscriber. That puts the promotion’s cost at about $3 million. The $50,000 of prizes is trivial in comparison. Let’s further assume that Consumer Reports runs the promotion expecting the additional donations it brings in to cover its cost.

The suggested donation is $9. Let’s say the average additional funding brought in by this campaign comes out to $10 per respondent.[^3] To break even, Consumer Reports needs 300,000 respondents.
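The cost and break-even arithmetic above fits in a few lines of Python. This is a minimal sketch: the constant and function names are hypothetical, the inputs are the post’s own generous assumptions (6 million subscribers, $0.50 per subscriber, $10 of extra funding per respondent), and, as in the post, the prize money is left out of the cost.

```python
# Back-of-the-envelope break-even estimate for the raffle mailing.
# All inputs are the post's assumptions, not Consumer Reports' real figures.
# Names are illustrative only.

SUBSCRIBERS = 6_000_000          # approximate subscriber count
COST_PER_SUBSCRIBER = 0.50       # assumed all-in cost per subscriber, in dollars
FUNDING_PER_RESPONDENT = 10.00   # assumed average extra funding per respondent


def promotion_cost(subscribers: int, cost_per_subscriber: float) -> float:
    """Total cost of running the promotion for every subscriber."""
    return subscribers * cost_per_subscriber


def break_even_respondents(cost: float, funding_per_respondent: float) -> float:
    """Respondents needed for the extra donations to cover the cost."""
    return cost / funding_per_respondent


cost = promotion_cost(SUBSCRIBERS, COST_PER_SUBSCRIBER)
respondents = break_even_respondents(cost, FUNDING_PER_RESPONDENT)

print(f"Promotion cost: ${cost:,.0f}")                # Promotion cost: $3,000,000
print(f"Break-even respondents: {respondents:,.0f}")  # Break-even respondents: 300,000
```

Changing any one assumption (say, $0.30 per subscriber instead of $0.50) scales the break-even count proportionally.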

With 300,000 respondents, nine tickets each, and $50,000 in prizes, the expected return is about 1.7 cents per ticket.[^4] Sixteen cents per person.[^5] Not even close to the cost of a stamp.
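The expected-value step can be sketched the same way, assuming every respondent sends in all nine tickets and the whole prize pool is paid out. The names below are illustrative. Using the round $50,000 figure, it gives roughly 1.9 cents per ticket and 17 cents per respondent, still far below a 55-cent stamp; any non-donors who also enter would only push these numbers lower. The loop at the end shows the pizza point: if everyone gets the same number of tickets, the per-person expected value doesn’t change.

```python
# Expected value of entering the raffle, under the post's assumptions.
# PRIZE_POOL uses the round "about $50,000" figure. Names are illustrative.

PRIZE_POOL = 50_000.00   # total value of all prizes, in dollars (approximate)
RESPONDENTS = 300_000    # break-even respondent count from the post
TICKETS_EACH = 9         # tickets included in each mailing
STAMP = 0.55             # price of the return stamp, in dollars


def expected_value_per_ticket(prize_pool: float, respondents: int, tickets_each: int) -> float:
    """Each ticket has an equal chance, so it gets an equal share of the pool in expectation."""
    return prize_pool / (respondents * tickets_each)


def expected_value_per_person(prize_pool: float, respondents: int, tickets_each: int) -> float:
    """One respondent's tickets combined; tickets_each cancels out."""
    return tickets_each * expected_value_per_ticket(prize_pool, respondents, tickets_each)


per_ticket = expected_value_per_ticket(PRIZE_POOL, RESPONDENTS, TICKETS_EACH)
per_person = expected_value_per_person(PRIZE_POOL, RESPONDENTS, TICKETS_EACH)

print(f"Per ticket: {per_ticket * 100:.2f} cents")
print(f"Per person: {per_person * 100:.1f} cents")
print(f"Worth the stamp? {per_person > STAMP}")

# Cutting the pizza into more slices: if everyone gets k tickets,
# the per-person expected value is the same for any k.
for k in (1, 9, 100):
    print(k, round(expected_value_per_person(PRIZE_POOL, RESPONDENTS, k), 4))
```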

4/12/2019 Update: I received a second, almost-identical mailing in early April.

10/3/2019 Update: I received a few more of these mailings.