Years ago, some cell carriers introduced “soft caps” for data. Subscribers who used up their allotted data could keep accessing the internet, but at a vastly reduced speed of 128kbps.

In some cases, this was a nice perk. A subscriber to Mint Mobile’s old 3GB plan who had run out of data might still be able to load an important email or boarding pass. In other cases, the reduced-speed data was part of a marketing gimmick. A carrier might offer a soft-capped 15GB plan and market it as an unlimited plan.

At 128kbps, things don’t just load slowly; in many cases, they stop working entirely. Video may not stream. Websites may time out.

Softer Caps

Recently, a handful of carriers started offering soft-capped plans with less aggressive throttles. At least five carriers now throttle download speeds to 1Mbps or higher:¹

- Xfinity – 1.5Mbps

- Cox – 1.5Mbps

- AT&T Prepaid – 1.5Mbps

- Spectrum – 1Mbps

- US Mobile – 1Mbps

Props to these carriers. At 1Mbps, you can stream music, browse the internet, and use most apps normally. High-quality video streaming might not work, but almost everything else will.

With the less aggressive throttling, labeling these plans “unlimited” is perhaps a generous framing, but it’s no longer outright bullshit.
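To put these throttle speeds in perspective, here’s a rough Python sketch of best-case load times and whether a stream can keep up. The 2MB page size, 160kbps music bitrate, and 5Mbps HD video bitrate are my own illustrative assumptions, not carrier or app figures, and the math ignores latency, overhead, and congestion.

```python
# Back-of-the-envelope throttling math. The payload size and stream
# bitrates below are illustrative assumptions, not measured figures.

def load_seconds(size_mb: float, speed_kbps: float) -> float:
    """Best-case seconds to download size_mb megabytes at speed_kbps.

    Uses decimal units (1 MB = 8,000 kilobits) and ignores latency,
    protocol overhead, and congestion, so real loads take longer.
    """
    return size_mb * 8_000 / speed_kbps


def can_sustain(stream_kbps: float, speed_kbps: float) -> bool:
    """True if the throttled link is at least as fast as the stream's bitrate."""
    return speed_kbps >= stream_kbps


# Throttle speeds discussed in this post, in kbps.
THROTTLES_KBPS = [128, 1_000, 1_500]

PAGE_MB = 2.0          # assumed size of a typical web page
MUSIC_KBPS = 160       # assumed bitrate of a standard-quality music stream
HD_VIDEO_KBPS = 5_000  # assumed bitrate of an HD video stream

for speed in THROTTLES_KBPS:
    print(
        f"{speed:>5} kbps: page loads in ~{load_seconds(PAGE_MB, speed):.0f}s, "
        f"music ok: {can_sustain(MUSIC_KBPS, speed)}, "
        f"HD video ok: {can_sustain(HD_VIDEO_KBPS, speed)}"
    )
```

Under those assumptions, the page takes about two minutes at 128kbps, versus roughly 16 seconds at 1Mbps and 11 seconds at 1.5Mbps. The music stream fits under both of the softer throttles, while the HD video stream fits under none of them.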

Throttled But Prioritized

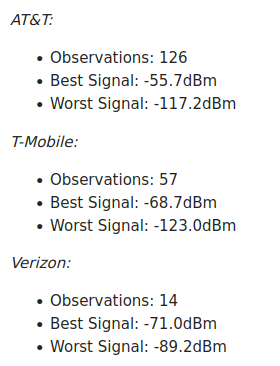

Ahmed Khattak, CEO of US Mobile, shared the following in a Reddit post (emphasis mine):

Network congestion is a common source of the sub-1Mbps speeds that cause lousy user experiences. With priority data, throttled users have some protection from congestion troubles.

I hope high-priority data after throttling becomes a more common feature.² Subscribers don’t use much data once they’re throttled, so MVNOs that pay a per-gig premium for priority data may be able to offer the feature without meaningfully changing their cost structures.