Several months ago, I published a blog post arguing that Consumer Reports’ cell phone rankings were broken. This month, Consumer Reports updated those rankings with data from another round of surveying its subscribers. The rankings are still broken.

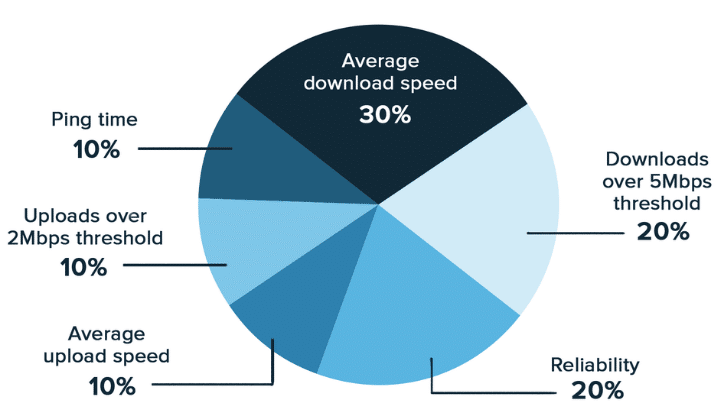

Consumer Reports slightly changed its approach this round. While Consumer Reports used to share results on 7 metrics, it now uses 5 metrics:

- Value

- Customer support

- Data

- Reception

- Telemarketing call frequency

Of the 19 carriers Consumer Reports assesses, only 5 operate their own network hardware.1 The other 14 carriers resell access to other companies’ networks while maintaining their own customer support teams and retail presences.2

Several of the carriers that don’t run their own network offer service over only one host network:

- Cricket Wireless – AT&T’s network

- Page Plus Cellular – Verizon’s network

- MetroPCS – T-Mobile’s network

- CREDO Mobile – Verizon’s network

- Boost Mobile – Sprint’s network

- GreatCall – Verizon’s network

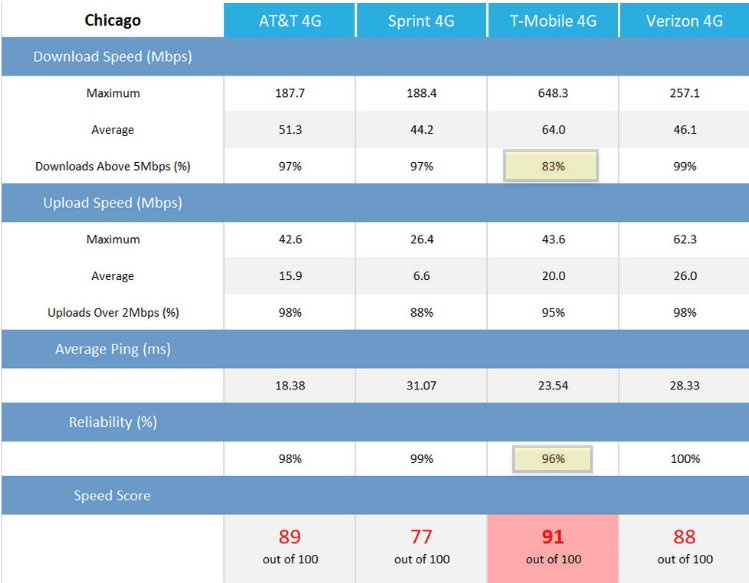

- Virgin Mobile – Sprint’s network

To test the validity of Consumer Reports’ methodology, we can compare scores on metrics assessing network quality between each of these carriers and its host network. At first glance, it looks like the reception and data metrics should both be exclusively about network quality. However, the scores for data account for value as well as quality.3 That leaves reception as the cleaner metric for this comparison. The host networks’ reception scores are:

- Verizon – Good

- T-Mobile – Fair

- AT&T – Poor

- Sprint – Poor

How do those companies’ scores compare to scores earned by carriers that piggyback on their networks?

- Cricket Wireless has good reception while AT&T has poor reception.

- Page Plus and Verizon both have good reception.

- MetroPCS has good reception while T-Mobile has fair reception.

- CREDO and Verizon both have good reception.

- Boost has very good reception while Sprint has poor reception.

- GreatCall and Verizon both have good reception.

- Virgin has good reception while Sprint has poor reception.

In four of the seven cases, carriers beat their host networks; in the other three, they tie. The massive differences between Cricket/AT&T and Boost/Sprint are especially concerning. In no case does a host operator beat a carrier that piggybacks on its network. I would have expected the opposite outcome, since host networks generally give higher priority to their direct subscribers when networks are busy.
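The tally behind that comparison can be sketched in a few lines of Python. The ratings are the ones listed in this post. The ordering of the rating labels and the names `RANK` and `tally` are my own assumptions for illustration, not anything Consumer Reports publishes.

```python
# Assumed ordering of the rating labels, lowest to highest.
SCALE = ["Poor", "Fair", "Good", "Very Good"]
RANK = {label: position for position, label in enumerate(SCALE)}

# Reception ratings for the host networks, as listed in this post.
HOST_RECEPTION = {
    "Verizon": "Good",
    "T-Mobile": "Fair",
    "AT&T": "Poor",
    "Sprint": "Poor",
}

# (reseller, host network, reseller's reception rating), as listed in this post.
RESELLERS = [
    ("Cricket Wireless", "AT&T", "Good"),
    ("Page Plus Cellular", "Verizon", "Good"),
    ("MetroPCS", "T-Mobile", "Good"),
    ("CREDO Mobile", "Verizon", "Good"),
    ("Boost Mobile", "Sprint", "Very Good"),
    ("GreatCall", "Verizon", "Good"),
    ("Virgin Mobile", "Sprint", "Good"),
]


def tally(resellers, host_scores):
    """Count how often a reseller rates above, equal to, or below its host."""
    counts = {"reseller higher": 0, "tie": 0, "host higher": 0}
    for _name, host, rating in resellers:
        difference = RANK[rating] - RANK[host_scores[host]]
        if difference > 0:
            counts["reseller higher"] += 1
        elif difference == 0:
            counts["tie"] += 1
        else:
            counts["host higher"] += 1
    return counts


result = tally(RESELLERS, HOST_RECEPTION)
print(result)
```

If resellers really do get equal or lower network priority than direct subscribers, a valid survey should push counts toward "tie" and "host higher", not toward "reseller higher".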

The rankings are broken.

What’s the problem?

I see two especially plausible explanations for why the survey results aren’t valid for comparison purposes:

- Non-independent scoring – Respondents may take prices into account when assessing metrics other than value. If that happens, scores won’t be valid for comparisons across carriers.

- Selection bias – Respondents were not randomly selected to try certain carriers. Accordingly, respondents who use a given carrier probably differ systematically from respondents who use another carrier. Differences in scores between two carriers could reflect either (a) genuine differences in service quality or (b) differences in the type of people who use each service.
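To make the selection-bias point concrete, here is a toy calculation. Every number in it is hypothetical and chosen only for illustration: the two carriers, the respondent types, the size of each type's rating shift, and the customer mixes are all assumptions, not survey data. The sketch shows that two carriers with identical service quality can still end up with different average ratings when their customers differ.

```python
# Hypothetical: both carriers deliver the same true quality (arbitrary 1-5 scale).
TRUE_QUALITY = 3.0

# Hypothetical respondent types and how far each shifts its rating
# away from the true quality.
RATING_SHIFT = {"lenient rater": 0.5, "strict rater": -0.5}

# Hypothetical share of each respondent type among each carrier's customers.
CUSTOMER_MIX = {
    "Carrier A": {"lenient rater": 0.8, "strict rater": 0.2},
    "Carrier B": {"lenient rater": 0.2, "strict rater": 0.8},
}


def mean_rating(mix):
    """Average rating a carrier receives given its mix of respondent types."""
    return sum(
        share * (TRUE_QUALITY + RATING_SHIFT[kind]) for kind, share in mix.items()
    )


ratings = {carrier: mean_rating(mix) for carrier, mix in CUSTOMER_MIX.items()}
for carrier, rating in ratings.items():
    print(f"{carrier}: {rating:.1f}")
```

In this made-up setup, Carrier A comes out ahead of Carrier B even though the service is the same, purely because of who answered the survey.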

Consumer Reports, please do better!

My earlier blog post about Consumer Reports’ methodology is one of the most popular articles I’ve written. I’m nearly certain staff at Consumer Reports have read it. I’ve tried to reach out to Consumer Reports through two different channels. First, I was ignored. Later, I got a response indicating that an editor might reach out to me. So far, that hasn’t happened.

I see three reasonable ways for Consumer Reports to respond to the issues I’ve raised:

- Adjust the survey methodology.

- Cease ranking cell phone carriers.

- Continue with the existing methodology, but mention its serious problems prominently when discussing results.

Continuing to publish rankings based on a broken methodology without disclosing its problems is irresponsible.