Ookla recently published its Q2 report on the performance of U.S. wireless networks. As I’ve discussed before, I’m not a fan of Ookla’s methodology.1 Because of my qualms, I’m not going to bother summarizing Ookla’s latest results. However, I do want to draw attention to one part of the recent report.

Ookla’s competitive geographies filter

In the last year or two, Ookla has restricted its main analyses to data from “competitive geographies.” Here’s how Ookla explains competitive geographies:

The competitive geographies filter mitigates some of the problems with Ookla’s methodology but also introduces a bunch of new issues.

Availability

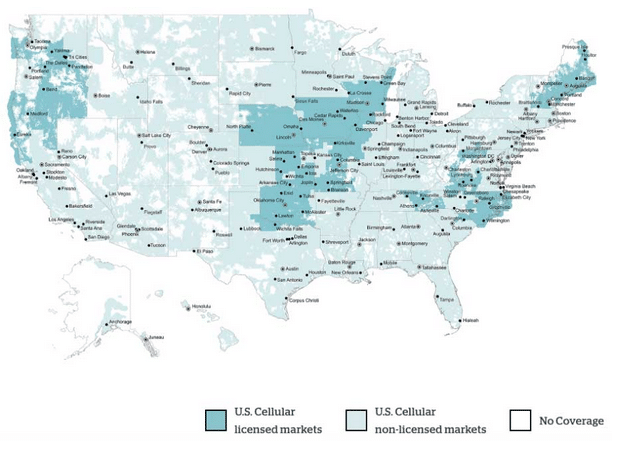

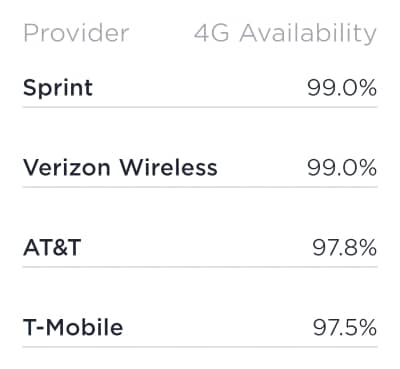

Ookla’s latest results for 4G availability illustrate the issues:

Sprint unambiguously has the smallest coverage profile of the four nationwide networks.2 Yet the competitive geographies filter distorts Ookla’s availability metric so badly that Sprint can tie for the best availability.

Lots of regions only have coverage from Verizon. All those data points get thrown away because they come from non-competitive geographies. Other areas have coverage from only Verizon and AT&T. Again, those data points get thrown out because they’re not from competitive geographies. What’s the point of measuring availability while ignoring the areas where differences in network availability are most substantial?
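
A toy calculation can make the effect concrete. The sketch below is not Ookla’s method. The regions and sample counts are made up, and I’m assuming, purely for illustration, that a geography counts as “competitive” only when all four carriers have coverage there. Availability is simplified to the share of test samples taken where a carrier has coverage.

```python
# Hypothetical toy data: each region lists the carriers with 4G coverage
# there and a made-up number of test samples. None of this comes from Ookla.
CARRIERS = ["Verizon", "AT&T", "T-Mobile", "Sprint"]

REGIONS = [
    {"covered": {"Verizon", "AT&T", "T-Mobile", "Sprint"}, "samples": 50},
    {"covered": {"Verizon", "AT&T", "T-Mobile"}, "samples": 20},
    {"covered": {"Verizon", "AT&T"}, "samples": 20},
    {"covered": {"Verizon"}, "samples": 10},
]


def availability(regions):
    """Share of samples taken in regions where each carrier has coverage."""
    total = sum(r["samples"] for r in regions)
    return {
        carrier: sum(r["samples"] for r in regions if carrier in r["covered"]) / total
        for carrier in CARRIERS
    }


def competitive_only(regions):
    """Assumed filter: keep only regions where every carrier has coverage."""
    return [r for r in regions if r["covered"] == set(CARRIERS)]


unfiltered = availability(REGIONS)
filtered = availability(competitive_only(REGIONS))

for carrier in CARRIERS:
    print(f"{carrier:9s} unfiltered={unfiltered[carrier]:.0%} filtered={filtered[carrier]:.0%}")
```

In this toy setup, the unfiltered numbers separate the carriers clearly, while the filtered numbers put every carrier in a tie. The filter discards exactly the regions where the carriers differ.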

Giving Ookla credit

Despite my criticisms, I want to give Ookla some credit. Many evaluators develop complicated, poorly thought-out metrics and only share those metrics when the results seem reasonable. I appreciate that Ookla didn’t hide its latest availability information because the results looked silly.