PCMag just released its 2020 report on the performance of cellular networks.

- Verizon took the top spot for overall performance.

- AT&T came in a close second.

- T-Mobile came in third place but led in 5G availability.

Differences from previous years’ tests

Due to logistical issues from the pandemic, PCMag altered its methodology:

PCMag also started placing more emphasis on 5G connections. I’m a big fan of how PCMag handled 5G performance in its scoring (emphasis mine):

Reservations

Most of my reservations from last year still stand. Notably:

- PCMag focuses on performance within cities, while the largest differences between networks’ performance tend to show up in less-populated areas.

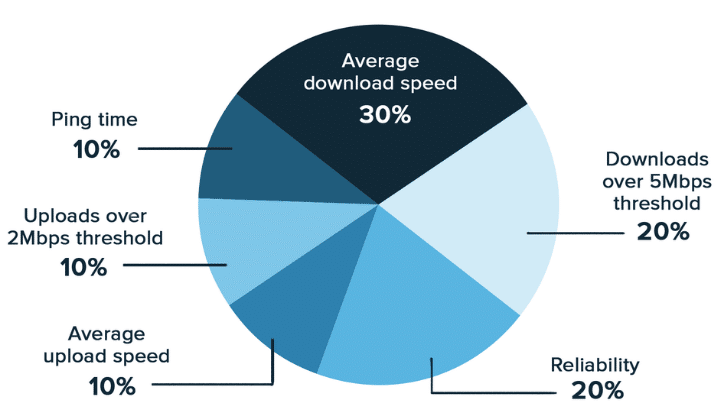

- Average speed metrics get more weight than I think is reasonable.

- Scores on different metrics get aggregated in a problematic way.

Highlights

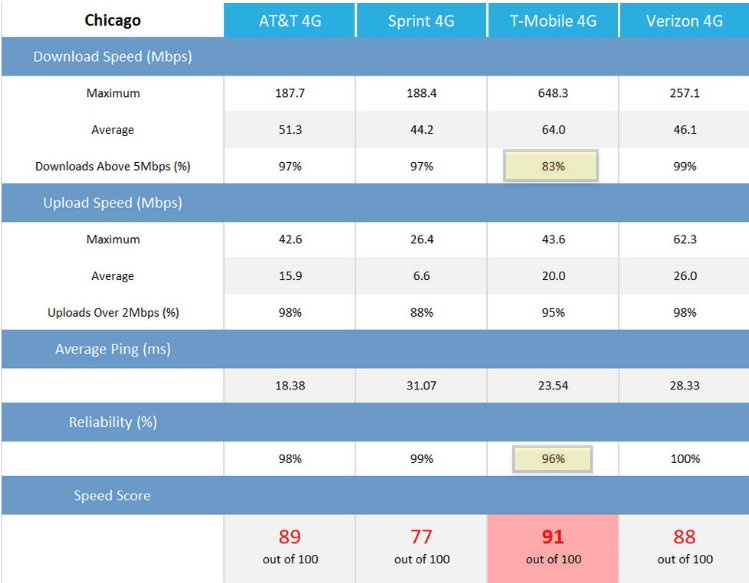

While my reservations are serious, they’re not relevant to the granular, city-specific results. If you live in a large metro area, PCMag’s scorecard for your city could be handy.

The 5G-availability data is interesting. Here’s each network’s overall score for 5G availability:

- T-Mobile: 54%

- AT&T: 38%

- Verizon: 4%

I’m surprised by how close AT&T came to T-Mobile. While Verizon’s 4% availability score isn’t impressive, it’s higher than I anticipated. Verizon has been getting berated for the horrible availability of its exclusively millimeter-wave 5G. Since Verizon hasn’t rolled out any 5G in some cities, the overall result masks heterogeneity between cities. E.g., PCMag found 9% 5G availability for Verizon in Chicago.
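To make the masking effect concrete, here is a minimal sketch. It assumes the overall score is a simple unweighted average across cities, which may not match how PCMag actually computes it. Only Chicago's 9% and the 4% overall figure come from the report; the other cities and their values are hypothetical placeholders, chosen so the average comes out to 4%.

```python
# Hypothetical per-city 5G availability (percent) for one carrier.
# Only Chicago's 9% is from PCMag; all other entries are invented for illustration.
availability_by_city = {
    "Chicago": 9.0,  # reported by PCMag
    "City B": 7.0,   # hypothetical
    "City C": 4.0,   # hypothetical
    "City D": 0.0,   # hypothetical: no 5G deployed
    "City E": 0.0,   # hypothetical: no 5G deployed
}


def unweighted_mean(values):
    """Average that gives every city equal weight (an assumption, not PCMag's stated method)."""
    values = list(values)
    return sum(values) / len(values)


# Average across every tested city, including those with no 5G at all.
overall = unweighted_mean(availability_by_city.values())

# Average across only the cities where some 5G was found.
deployed = [v for v in availability_by_city.values() if v > 0]
mean_where_deployed = unweighted_mean(deployed)

print(f"Overall: {overall:.1f}%")
print(f"Cities with any 5G: {mean_where_deployed:.1f}%")
```

In this made-up example, the zero-availability cities pull the overall number well below what a subscriber in a covered city would experience, which is why the city-level scorecards are more informative than the headline figure.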